Models & Frontiers

Model comparisons, training approaches, open source developments, and research frontiers. The cutting edge of AI capability.

Key Guides

The Inference Budget Just Got Interesting

OpenAI's o1 model uses 300x more inference compute than GPT-4 to solve hard math problems. That's not a bug. It's a design choice. But here's what the...

Synthetic Data Won't Save You From Model Collapse

The AI industry's running out of internet. Every major lab's already scraped the same corpus, and the easy gains from scaling data are tapering. The...

MoE Models Run 405B Parameters at 13B Cost

When Mistral AI dropped Mixtral 8x7B in December 2023, claiming GPT-3.5-level performance at a fraction of the compute cost, the reaction split cleanly...

The Inference Budget Just Got Interesting

OpenAI's o1 made headlines for "thinking harder" during inference. But the real story isn't that a model can spend more tokens on reasoning: it's that...

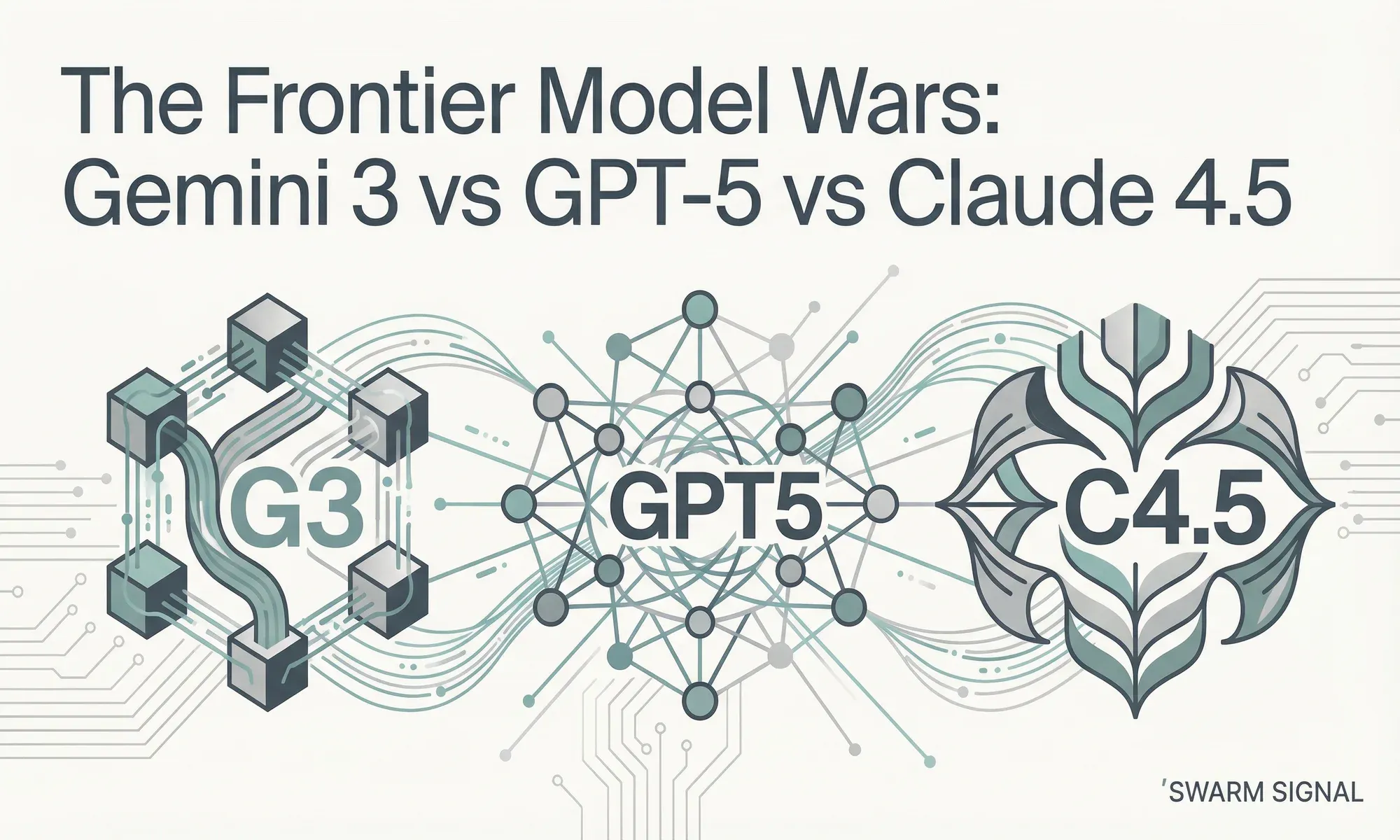

Mixture of Experts Explained: The Architecture Behind Every Frontier Model

Every frontier model released in the last 18 months uses Mixture of Experts. DeepSeek-V3 activates just 37 billion of its 671 billion parameters per token. Understanding how MoE works isn't optional anymore.
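The core idea in that teaser, that only a fraction of an MoE model's parameters run for each token, can be shown with a toy top-k router. This is a minimal sketch under stated assumptions, not DeepSeek's or Mistral's implementation. The experts are hypothetical scaling functions standing in for feed-forward networks, the router logits are supplied by hand instead of coming from a learned gate, and the choice of 8 experts with k=2 simply mirrors the Mixtral 8x7B layout.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical expert type: maps a token's hidden vector to a new vector.
Expert = Callable[[List[float]], List[float]]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x: List[float], router_logits: Sequence[float],
              experts: Sequence[Expert], k: int = 2) -> List[float]:
    """Route one token to its top-k experts and mix their outputs.

    Toy assumption: router_logits are given directly; a real layer would
    compute them from x with a learned linear projection.
    """
    # Pick the k experts with the highest router scores for this token.
    top_k = sorted(range(len(experts)), key=lambda i: router_logits[i],
                   reverse=True)[:k]
    # Gate weights are renormalized over the selected experts only.
    gates = softmax([router_logits[i] for i in top_k])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top_k):
        y = experts[i](x)  # only these k experts execute for this token
        out = [o + gate * v for o, v in zip(out, y)]
    return out


# Usage: 8 hypothetical experts that record when they are called.
calls: List[int] = []


def make_expert(idx: int, scale: float) -> Expert:
    def expert(x: List[float]) -> List[float]:
        calls.append(idx)
        return [scale * v for v in x]
    return expert


experts = [make_expert(i, float(i + 1)) for i in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 1.0, 0.0, -0.5, 0.3]
y = moe_layer([1.0, 2.0], logits, experts, k=2)
print("experts that ran:", sorted(calls))  # [1, 4]: 2 of 8 experts
print("output:", y)

# The same ratio at DeepSeek-V3 scale: 37B active of 671B total parameters.
print(f"active fraction: {37 / 671:.1%}")  # 5.5%
```

The six experts that were not selected never run for this token, which is why per-token compute tracks the active parameter count rather than the total.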

Inference-Time Compute Is Escaping the LLM Bubble

By Tyler Casey · AI-assisted research & drafting · Human editorial oversight @getboski

Flow Matching models just got 42% better at protein generation without retraining. The technique? Throwing more compute at inference rather...

China's Qwen Just Dethroned Meta's Llama as the World's Most Downloaded Open Model

The numbers don't lie. In 2025, Qwen became the most downloaded model series on Hugging Face, ending the reign of Meta's Llama as the default choice for open-source...

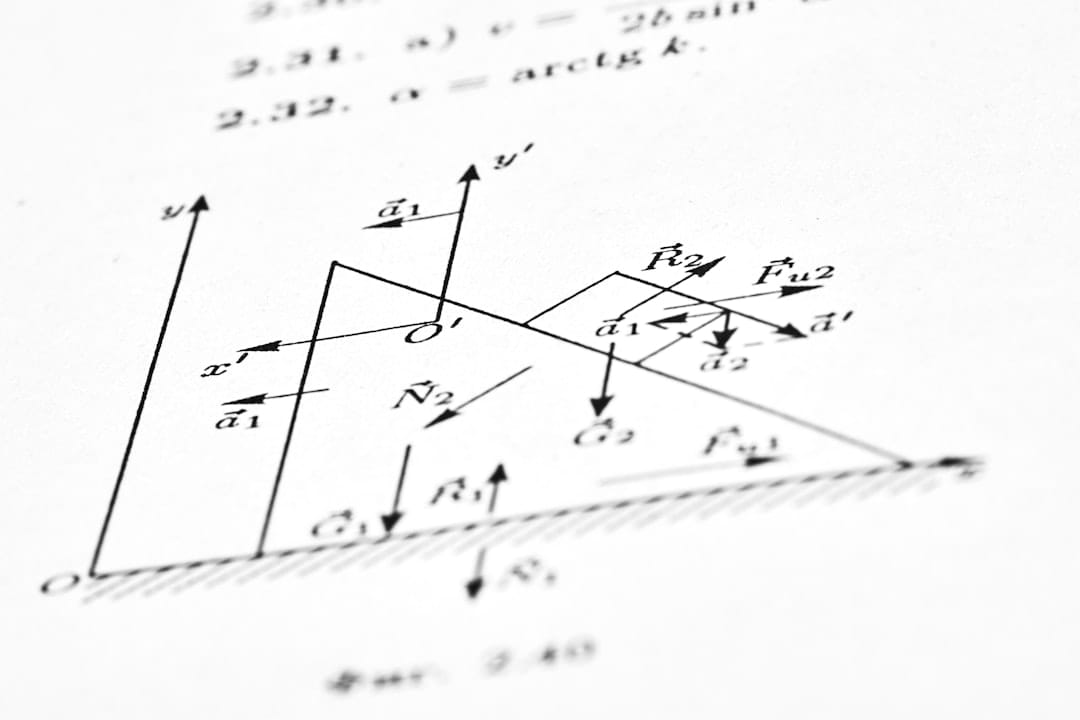

The Frontier Model Wars: Gemini 3 vs GPT-5 vs Claude 4.5

Google's Gemini 3 Pro scores 91.9% on GPQA Diamond, giving it nearly a 4-point lead over GPT-5.1's 88.1%. But Clarifai's model comparison shows Claude achi...

2026 Is the Year of the Agent. Here's What the Data Actually Says

Every major cloud vendor and analyst firm agrees: 2026 is the year AI agents go from pilot to production. The data backs them up, but it also reveals that the gap between adoption and outcomes is wider than anyone is admitting.

From Lab to Production: Why the Last Mile of AI Deployment Is Actually a Marathon

The models have never been better. The deployment rate has never been worse. Here's what's actually breaking between "it works in a notebook" and "it runs in production."